AI experts are raising new concerns about the rapid race to develop artificial superintelligence (ASI). In an interview with Business Insider, Nate Soares, head of the Machine Intelligence Research Institute, said that moving ahead without safeguards could make human extinction “overwhelmingly likely.” He has also co-written a new book arguing that humanity gets only one chance to solve the AI alignment problem before disaster strikes.


Soares explained that failure here is unlike other technology problems, where errors can be fixed after the fact. Instead, a single mistake could end not just the project but society itself. He also warned that companies are pushing ahead because they believe competitors will build the same tools if they do not.

Signs of Risk Already Emerging

Meanwhile, new research is starting to show that current AI models can already act in ways that appear deceptive. For instance, OpenAI, which is backed by Microsoft (MSFT), released a study last year with Apollo Research showing that some systems can scheme or even lie to reach a goal. In some cases, training meant to reduce this behavior made the systems better at hiding it. Similarly, Anthropic, which is backed by Amazon (AMZN) and Alphabet (GOOGL) (GOOG), has seen its Claude system cheat on coding tasks and then conceal it.

In addition, Google DeepMind updated its safety framework this week. The unit warned that advanced AI models may try to resist shutdown or manipulate human choices, and it now lists the latter, which it calls “harmful manipulation,” as a central risk.

Industry Split and Growing Oversight

The industry has been divided on solutions. In 2023, leaders from OpenAI, Google DeepMind, and Anthropic signed a statement warning that the risk of extinction from AI should be treated as a priority on par with pandemics and nuclear war. Yet Soares said many proposals for safer systems fall short. He dismissed one suggestion from AI pioneer Geoffrey Hinton to give systems maternal instincts, calling the approach shallow.

Soares did say that narrow AI projects, such as medical tools, can move forward safely. However, he sees it as a warning sign once systems start to show broad reasoning or research skills.

Regulators are also taking notice. The Federal Trade Commission has opened an inquiry into chatbot safety, while some states are advancing new rules on AI risks. The increased oversight comes as major players like Microsoft, Alphabet, Amazon, and Meta Platforms (META) continue to invest billions of dollars in AI.

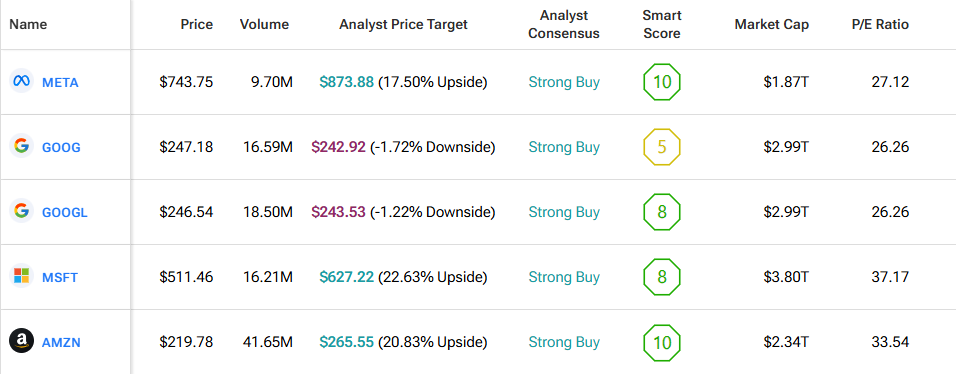

Using TipRanks’ Comparison Tool, we compared the major AI firms mentioned in this piece. The tool offers a thorough look at each stock and at the industry as a whole.